Jaeseok Kim

Postdoctoral Researcher @ Assistive Robotics Laboratory

Supervised Learning, Computer Vision & Robotics

Grasping Complex-Shaped and Thin Objects Using a Generative Grasping Convolutional Neural Network

Jaeseok Kim, Olivia Nocentini, Muhammad Zain Bashir, Filippo Cavallo

We propose an architecture that combines the Generative Grasping Convolutional Neural Network (GG-CNN) with depth recognition to identify optimal grasp poses for complex-shaped and thin objects. The GG-CNN is trained on a dataset extended with data augmentation and RGB images. The tool region is extracted using color segmentation, and its average depth is calculated. Different encoder-decoder models are applied and evaluated using Intersection over Union (IoU), and the proposed architecture is validated through real-world grasping and pick-and-place experiments, achieving success rates of over 85.6% for seen surgical tools and 90% for unseen surgical tools.
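
The color-segmentation, average-depth, and IoU steps mentioned above can be sketched with NumPy. This is a minimal illustration under stated assumptions, not the code used in the paper: the RGB threshold bounds, the choice of RGB rather than another color space, and the rule of skipping zero depth readings are all hypothetical, and the GG-CNN itself is not shown.

```python
import numpy as np


def color_mask(rgb: np.ndarray, lower, upper) -> np.ndarray:
    """Boolean mask of pixels whose RGB values lie within [lower, upper] on every channel."""
    lo = np.asarray(lower, dtype=rgb.dtype)
    hi = np.asarray(upper, dtype=rgb.dtype)
    return np.all((rgb >= lo) & (rgb <= hi), axis=-1)


def mean_depth(depth: np.ndarray, mask: np.ndarray) -> float:
    """Average depth over masked pixels, skipping zero (invalid) readings (an assumption)."""
    valid = mask & (depth > 0)
    if not valid.any():
        raise ValueError("no valid depth pixels inside the mask")
    return float(depth[valid].mean())


def iou(pred: np.ndarray, target: np.ndarray) -> float:
    """Intersection over Union of two boolean masks (1.0 if both are empty)."""
    inter = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    return float(inter / union) if union > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical 4x4 scene: a red 2x2 "tool" 0.5 m away on a table 0.8 m away.
    rgb = np.zeros((4, 4, 3), dtype=np.uint8)
    rgb[1:3, 1:3] = (200, 30, 30)
    depth = np.full((4, 4), 0.8)
    depth[1:3, 1:3] = 0.5
    mask = color_mask(rgb, (150, 0, 0), (255, 80, 80))
    print(mean_depth(depth, mask))  # 0.5
    truth = np.zeros((4, 4), dtype=bool)
    truth[1:3, 1:4] = True
    print(iou(mask, truth))  # 4 / 6 ≈ 0.667
```

IoU here is the number of pixels in both masks divided by the number of pixels in either; returning 1.0 when both masks are empty is a convention chosen for this sketch.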

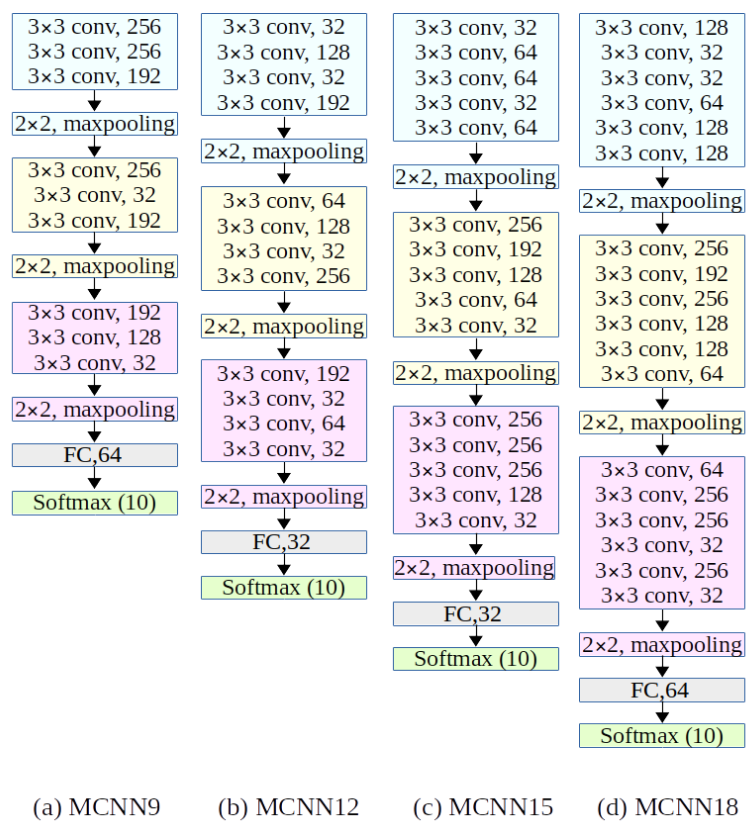

Image Classification Using Multiple Convolutional Neural Networks on the Fashion-MNIST Dataset

Olivia Nocentini, Jaeseok Kim, Muhammad Zain Bashir, Filippo Cavallo

Optimal Grasp Pose Estimation for Pick and Place of Frozen Blood Bags Based on Point Cloud Processing and Deep Learning Strategies Using Vacuum Grippers

Muhammad Zain Bashir, Jaeseok Kim, Olivia Nocentini, Filippo Cavallo

We present different strategies for computing optimal grasp poses for vacuum grippers using 3D vision, in order to perform automatic pick-and-place operations on frozen blood bags, which typically have curved or irregular surfaces. The proposed methods process RGB-D data to search for locally flat patches on the bag surfaces, which serve as optimal grasp candidates for vacuum grippers. The strategies differ in how they compute the best grasp point; all methods achieve an average success rate above 80% on a pick-and-place task, as validated and compared through real-world experiments.
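
One generic way to search a point cloud for locally flat patches is a PCA plane fit over each point's neighborhood, scoring flatness by the surface variation λ_min / (λ_1 + λ_2 + λ_3) of the neighborhood covariance. The sketch below uses that criterion as an assumed stand-in; it is not one of the paper's specific strategies, and the neighborhood size `k`, the candidate `stride`, and the brute-force neighbor search are illustrative choices.

```python
import numpy as np


def surface_variation(patch: np.ndarray) -> tuple:
    """PCA flatness of a (k, 3) patch: lambda_min / sum(lambda), plus the fitted plane normal."""
    centered = patch - patch.mean(axis=0)
    cov = centered.T @ centered / len(patch)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    total = eigvals.sum()
    variation = float(eigvals[0] / total) if total > 0 else 0.0
    # The eigenvector of the smallest eigenvalue is the normal of the best-fit plane.
    return variation, eigvecs[:, 0]


def best_flat_patch(points: np.ndarray, k: int = 30, stride: int = 10) -> tuple:
    """Return (centroid, normal, variation) of the flattest k-neighbor patch.

    Every `stride`-th point is a candidate; neighbors are found by brute force,
    which is only reasonable for small clouds.
    """
    best = None
    for idx in range(0, len(points), stride):
        dists = np.linalg.norm(points - points[idx], axis=1)
        patch = points[np.argsort(dists)[:k]]
        variation, normal = surface_variation(patch)
        if best is None or variation < best[2]:
            best = (patch.mean(axis=0), normal, variation)
    return best
```

For a suction cup, the patch centroid is a natural contact point and the normal a natural approach direction. On real RGB-D clouds, a k-d tree would replace the brute-force neighbor search.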

SurgGrip: a compliant 3D printed gripper for vision-based grasping of surgical thin instruments

Jaeseok Kim, Anand Kumar Mishra, Lorenzo Radi, Muhammad Zain Bashir, Olivia Nocentini, Filippo Cavallo

We introduce the design and implementation of SurgGrip, a soft and compliant 3D-printed gripper intended for grasping various thin, flat surgical instruments. SurgGrip consists of resilient mechanisms, flat fingertips with mortise-and-tenon joints, soft pads, and a four-bar linkage with a leadscrew mechanism that enables precise finger movements. The gripper can be operated autonomously using computer vision, and the experimental results show that SurgGrip can detect, segment, and grasp surgical instruments with a 67% success rate.

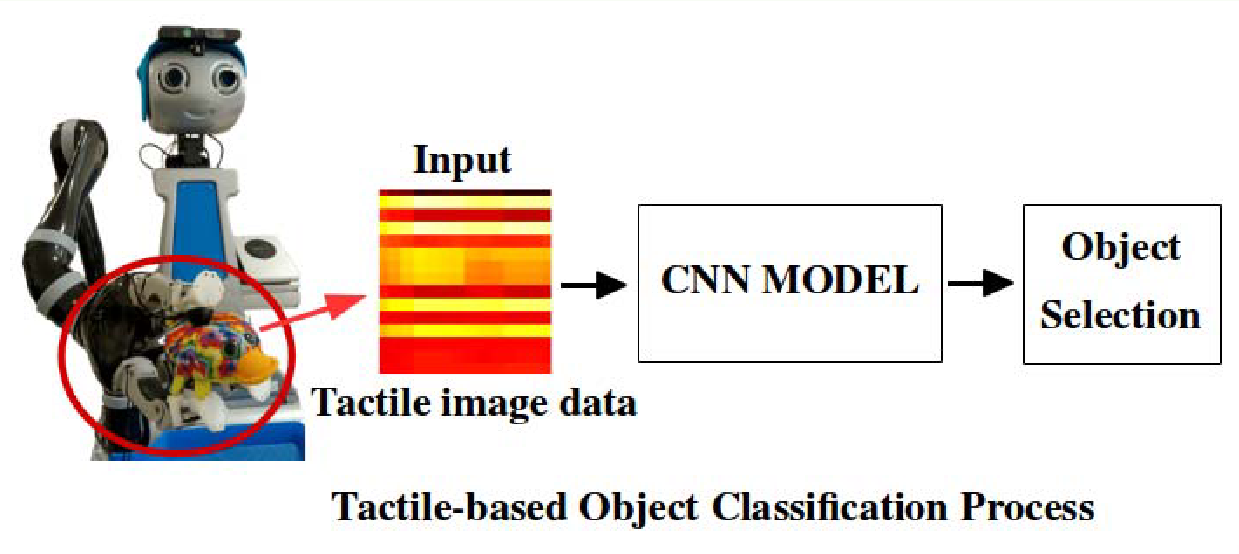

The Impact of Data Augmentation on Tactile-Based Object Classification Using Deep Learning Approach

Philip Maus, Jaeseok Kim, Olivia Nocentini, Muhammad Zain Bashir, Filippo Cavallo

We present a machine learning-based approach for tactile object classification, which is important for safe and versatile interaction between humans and objects. We use a 3-finger gripper mounted on a robotic manipulator to collect a custom dataset of 20 classes and 2000 samples, augment this dataset using various methods, and investigate the effect on object recognition performance for different neural network architectures. The results show that data augmentation significantly improves classification accuracy, with the best performance achieved by a D-CNN trained on a dataset augmented with scaling and time warping.
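
Scaling and time warping, the two augmentations named above, can be sketched for a tactile recording stored as a (T, C) array of T time steps and C sensor channels. The array layout, the noise levels `sigma`, the number of warp knots, and the piecewise-linear warp (common formulations use a smooth spline instead) are assumptions for illustration, not the paper's settings.

```python
import numpy as np


def scale(x: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Multiply each channel of a (T, C) series by one random factor drawn from N(1, sigma)."""
    factors = rng.normal(loc=1.0, scale=sigma, size=(1, x.shape[1]))
    return x * factors


def time_warp(x: np.ndarray, sigma: float, n_knots: int, rng: np.random.Generator) -> np.ndarray:
    """Resample a (T, C) series (T >= 2) along a randomly stretched and compressed time axis."""
    t = np.arange(x.shape[0], dtype=float)
    # Random local playback speeds at a few knots; clipping keeps them positive.
    speeds = np.clip(rng.normal(1.0, sigma, size=n_knots), 0.1, None)
    speed = np.interp(t, np.linspace(0.0, t[-1], n_knots), speeds)
    # Integrating positive speeds gives a strictly increasing warp, rescaled to [0, T-1].
    warped = np.cumsum(speed)
    warped = (warped - warped[0]) / (warped[-1] - warped[0]) * t[-1]
    # Read every channel at the warped time stamps (the endpoints stay fixed).
    return np.stack([np.interp(warped, t, x[:, c]) for c in range(x.shape[1])], axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical tactile sample: 100 time steps from 3 channels.
    sample = np.sin(np.linspace(0.0, 2 * np.pi, 100))[:, None] * np.array([1.0, 0.5, 0.2])
    augmented = [scale(sample, 0.1, rng), time_warp(sample, 0.2, 4, rng)]
    print([a.shape for a in augmented])  # [(100, 3), (100, 3)]
```

Both transforms keep the array shape, so augmented copies can be added to the training set alongside the originals without changing the network input.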

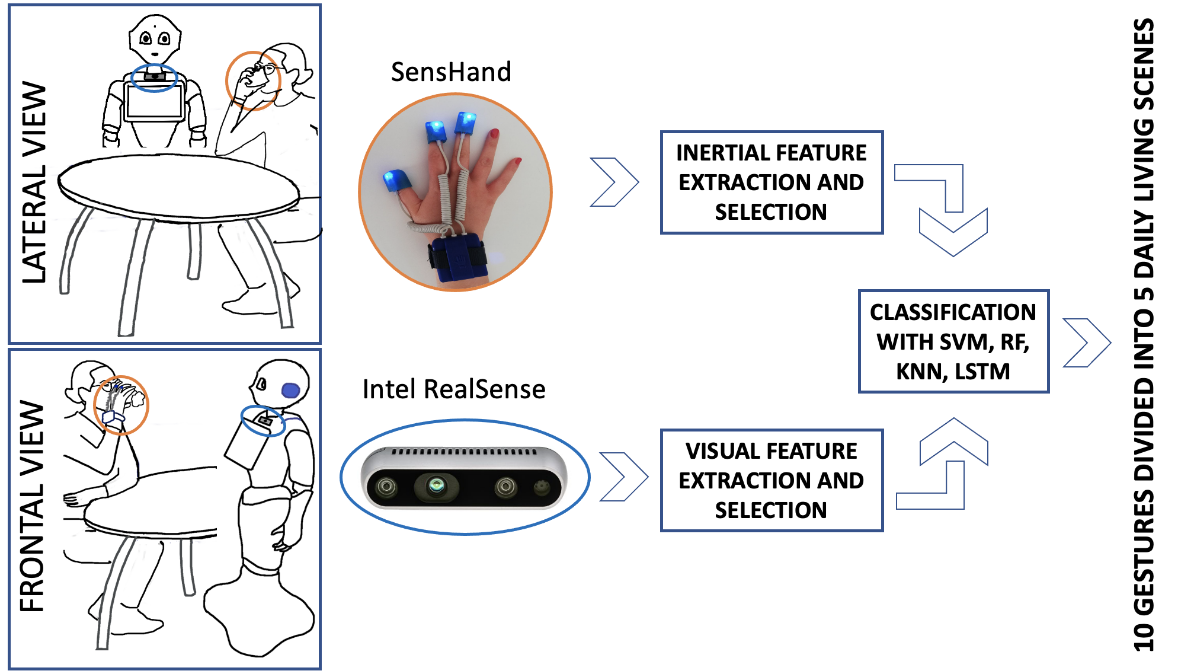

Daily gesture recognition during human-robot interaction combining vision and wearable systems

Laura Fiorini, Federica G. Cornacchia Loizzo, Alessandra Sorrentino, Jaeseok Kim, Erika Rovini, Alessandro Di Nuovo, Filippo Cavallo

This article presents a system that combines an RGB-D camera mounted on a Pepper robot with SensHand, a wearable inertial device, to recognize human gestures and improve human-robot cooperation. The system acquired data from twenty people performing five daily living activities, and the acquired data were classified offline using four algorithms to evaluate the recognition capabilities of the sensor fusion approach. The results showed a significant improvement in accuracy when fusing camera and sensor data, and the system performed well in recognizing transitions between gestures.

Cleaning tasks knowledge transfer between heterogeneous robots: a deep learning approach

Jaeseok Kim, Nino Cauli, Pedro Vicente, Bruno Damas, Alexandre Bernardino, José Santos-Victor, Filippo Cavallo

We discuss the development of autonomous service robots capable of adapting to changes in task and sensory feedback. A convolutional neural network is used to generalize cleaning demonstrations to different dirt and stain patterns, enabling knowledge transfer between robots operating in different environments. Data augmentation techniques and image transformations provide robustness to changes in robot posture and illumination. The approach is demonstrated by transferring knowledge from a robot trained in Lisbon to the DoRo robot in Peccioli, Italy.

An Innovative Automated Robotic System based on Deep Learning Approach for Recycling Objects

Jaeseok Kim, Olivia Nocentini, Marco Scafuro, Raffaele Limosani, Alessandro Manzi, Paolo Dario and Filippo Cavallo

This project describes an industrial robotic recycling system that integrates image processing, grasping, motion planning, and object material classification to sort waste objects into carton and plastic categories. The system uses a multifunctional grasping tool, depth and RGB cameras, segmentation, grasp-point detection, and a deep learning approach based on a modified LeNet model for object recognition. In the evaluation, suction grasping succeeded in 86.09% of trials for carton samples and 90% for plastic samples, and the classifier reached 96% accuracy on test samples.

Integration of an Autonomous System with Human-in-the-Loop for Grasping an Unreachable Object in the Domestic Environment

Jaeseok Kim, Raffaele Limosani and Filippo Cavallo

We developed an autonomous system for domestic robot manipulation that combines human cognitive skills with robot behaviors. The system can grasp multiple objects of random sizes at known and unknown table heights using three manipulation strategies. The robot can make independent decisions in random scenarios and can be controlled through a visual or voice interface.

Autonomous table-cleaning from kinesthetic demonstrations using Deep Learning

Nino Cauli, Pedro Vicente, Jaeseok Kim, Bruno Damas, Alexandre Bernardino, Filippo Cavallo and Jose Santos-Victor

This project describes an autonomous system that teaches a robot to perform table-cleaning tasks, using a deep convolutional network to learn the parameters of a Gaussian Mixture Model (GMM) that represents the hand movement. The network is trained with 2D table-space trajectories and images, and an augmented dataset makes it robust, enabling the robot to perform cleaning arm movements. The system was tested on the iCub robot, which showed cleaning behavior similar to that of the human demonstrators.
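
A common way for a network to output GMM parameters is a mixture-density head: raw outputs are mapped to valid weights with a softmax and to positive standard deviations with an exponential, and training minimizes the negative log-likelihood of demonstrated points. The sketch below shows that mapping for a 2D table-space GMM. The output layout, the diagonal covariances, and the NLL loss are assumptions, since the abstract does not specify how the parameters are learned.

```python
import numpy as np


def gmm_params(raw: np.ndarray, n_comp: int) -> tuple:
    """Split a raw output vector of length 5 * n_comp into valid 2D GMM parameters.

    Hypothetical layout: [logits (K), means (K * 2), log standard deviations (K * 2)].
    """
    logits = raw[:n_comp]
    means = raw[n_comp:3 * n_comp].reshape(n_comp, 2)
    stds = np.exp(raw[3 * n_comp:5 * n_comp].reshape(n_comp, 2))  # exp keeps stds positive
    weights = np.exp(logits - logits.max())  # numerically stable softmax
    weights = weights / weights.sum()
    return weights, means, stds


def gmm_nll(points: np.ndarray, weights, means, stds) -> float:
    """Mean negative log-likelihood of (N, 2) table-space points under a diagonal GMM."""
    diff = (points[:, None, :] - means[None, :, :]) / stds[None, :, :]  # (N, K, 2)
    # Log density of each 2D diagonal Gaussian component.
    log_norm = -0.5 * np.sum(diff ** 2, axis=2) - np.sum(np.log(stds), axis=1) - np.log(2 * np.pi)
    log_joint = np.log(weights)[None, :] + log_norm  # (N, K)
    m = log_joint.max(axis=1, keepdims=True)
    log_lik = m[:, 0] + np.log(np.exp(log_joint - m).sum(axis=1))  # log-sum-exp over components
    return float(-log_lik.mean())
```

In a real training loop the same computation would be written in an autodiff framework so that the loss can be back-propagated into the convolutional network; NumPy is used here only to show the arithmetic.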

“iCub, clean the table!” A robot learning from demonstration approach using Deep Neural Networks

Jaeseok Kim, Nino Cauli, Pedro Vicente, Bruno Damas, Filippo Cavallo and Jose Santos-Victor

We developed a method to teach robots how to clean using kinesthetic demonstrations. We use a deep neural network to automatically extract reference frames from the robot camera images, enabling the robot to identify and perform specific cleaning tasks. Our approach enables the robot to successfully clean a table with different types of dirt.

Teleoperation of the Care-O-bot 3 with force feedback using the DLR-HMI

Jaeseok Kim, Ribin Balachandran and Jeehwan Ryu

The Care-O-bot 3 is a service robot that can help elderly and disabled individuals with tasks such as carrying objects and running errands. To ensure that the robot operates safely, a teleoperation system with force feedback was developed that allows a human to control it remotely through the DLR-HMI. The system was tested and shown to work well, and solutions were proposed for problems that arise while operating the robot.

Shared Teleoperation of a Vehicle with a Virtual Driving Interface

Jaeseok Kim and Jeehwan Ryu

Autonomous vehicles face challenges when operating in real-world environments. To address this, we developed a vehicle teleoperation method that creates 3D maps and environmental data to guide autonomous vehicles. The method uses two approaches to create waypoints: automatic generation based on time, and direct human input. It was tested using two algorithms and proved effective in controlling the vehicle even without preloaded map data. This method could improve the safety and reliability of autonomous vehicles.
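
The time-based auto-generation approach could, for example, place a waypoint at a fixed period along a timestamped path. The sketch below assumes exactly that; the function name, the `period` parameter, and linear interpolation between recorded samples are hypothetical choices, not details taken from the paper.

```python
import numpy as np


def time_based_waypoints(times: np.ndarray, xy: np.ndarray, period: float) -> np.ndarray:
    """Hypothetical helper: one (x, y) waypoint every `period` seconds along a timestamped path.

    `times` is increasing with shape (N,); `xy` has shape (N, 2). Positions between
    recorded samples are linearly interpolated, and np.interp clamps any stamp that
    falls past the last sample to the final position.
    """
    stamps = np.arange(times[0], times[-1] + 1e-9, period)
    return np.column_stack([np.interp(stamps, times, xy[:, 0]),
                            np.interp(stamps, times, xy[:, 1])])


if __name__ == "__main__":
    t = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
    path = np.column_stack([t, 2.0 * t])  # hypothetical straight-line path
    print(time_based_waypoints(t, path, period=2.0))
```

A shorter period yields denser waypoints that follow the path more closely, at the cost of more points for the vehicle controller to track.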